RiskTrack Ontology for On-line Radicalization Domain

Authors: M. Barhamgi, N. Faci, and A. Masmoudi

February 28, 2018

1. INTRODUCTION

On this page, we present the core of the RiskTrack domain ontology. Our ontology defines the radicalization indicators along with the wealth of information required to compute them. It also defines and incorporates important information about existing terrorist organizations and groups. Our ontology is extensible, i.e., domain experts can extend it with new indicators and domain knowledge. To define our ontology, we adopted the two-step methodology proposed in [1]. The first step consists of groups of experts collaboratively designing and organizing the domain ontology. In this step, the experts reused, whenever possible, relevant concepts from existing terrorism-domain ontologies and complemented them with concepts specific to the radicalization domain. The second step consists of integrating the resulting domain ontology into a semantic web tool. The resulting tool can be used to maintain the ontology and can further be exploited to visualize and query radicalized users’ data (e.g., personal information, detected radical messages). The first step was carried out in a top-down fashion: we first identified and defined the key concepts (i.e., the most abstract ones), then refined them into more concrete concepts that represent specific knowledge or entities. Finally, we associated the relevant keywords with each of the defined concepts.
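The top-down design described above (abstract concepts first, refined into concrete ones, each carrying keywords) can be illustrated with a small tree structure. This is a minimal sketch, not the RiskTrack tool: the `Concept` class, the `refine` and `concepts_mentioned` helpers, and the sample keywords are all hypothetical. It assumes that a message mentions a concept when one of the concept's keywords occurs in it. Mentioning a concrete concept also counts as mentioning its more abstract ancestors.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class Concept:
    """A node of a hypothetical concept hierarchy built top-down."""
    name: str
    keywords: set[str] = field(default_factory=set)
    # The parent link is excluded from repr/eq to avoid walking back up the tree.
    parent: Concept | None = field(default=None, repr=False, compare=False)
    children: list[Concept] = field(default_factory=list)

    def refine(self, name: str, keywords: set[str] | None = None) -> Concept:
        """Create a more concrete sub-concept of this concept."""
        child = Concept(name, set(keywords or ()), parent=self)
        self.children.append(child)
        return child

    def ancestors(self) -> list[Concept]:
        """More abstract concepts above this one, nearest first."""
        chain, node = [], self.parent
        while node is not None:
            chain.append(node)
            node = node.parent
        return chain


def concepts_mentioned(root: Concept, text: str) -> set[str]:
    """Names of concepts whose keywords occur in `text`, plus their ancestors."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    found: set[str] = set()
    stack = [root]
    while stack:  # depth-first walk over the whole hierarchy
        concept = stack.pop()
        if concept.keywords & tokens:
            found.add(concept.name)
            found.update(a.name for a in concept.ancestors())
        stack.extend(concept.children)
    return found


# Illustrative fragment: the abstract "Focus" concept refined into concrete ones.
focus = Concept("Focus")
west = focus.refine("West", {"west", "western", "usa", "europe"})
islamism = focus.refine("Islamism", {"islam", "islamic", "muslim", "muslims"})
```

Propagating a match to the ancestors lets a rule written against an abstract concept fire for a message that only uses the keywords of one of its concrete sub-concepts.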

2. PRESENTATION OF RISKTRACK ONTOLOGY

We

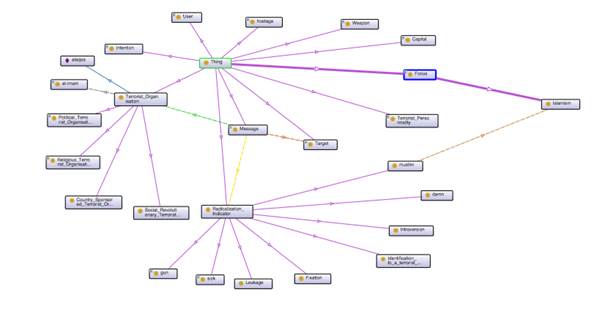

present in Figure-1 shows some of the key concepts of

our ontology. For clarity purposes, the figure does not show the properties of

defined concepts. The ontology is composed of three main parts: (i) user profile and messages (PART 1), (ii) the

radicalization indicators (PART 2), (iii) radicalization and

terrorism related concepts (PART 3). We subsequently detail all of

these parts.

Figure-1:

Overview of RiskTrack Ontology

PART 1 - User profile and messages

This part represents social network users, their personal information, and the messages they post. The main concepts are the following:

- User: The “User” concept represents a social network user. It has several datatype properties, such as “hasUserName”, “hasLocaton”, “age”, “maritalStatus”, “social-economicClass”, “religion”, and “hasFollowersCount”, that model the user’s personal information. Such information is usually present in (or can be inferred from) his or her online profile. The “User” concept is linked to the “Message” concept through the object property “HasPosted”.

- Message: This concept represents the messages posted by a user. It has several datatype properties, such as “hasLength”, “hasTime” (the posting time), and “hasNumberOfEllipses”. This concept is central to our ontology: it can be associated with any other concept, such as the radicalization indicators (e.g., when the message contributes to the computation of an indicator), terrorist organizations (e.g., when it mentions a terrorist organization), weapons, etc.

- Focus: The “Focus” concept represents the attitudes and beliefs a user adopts, or the communities he or she is interested in, such as “Jihadism”, “West”, “Islamism”, and “Discrimination”, which are defined as its subclasses.

- Intention: The “Intention” concept, which has two subclasses, “Negativity” and “Positivity”, defines the nature of a user’s attitude or emotions towards a topic, as expressed by positive and negative words. The concepts “Negativity” and “Positivity” are further decomposed into more precise concepts.
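The “User” and “Message” concepts and the “HasPosted” link can be mirrored by plain record types. The sketch below is an illustration under assumptions: the snake_case names map loosely onto the ontology properties, only a subset of the properties is modelled, and the `annotations` field (holding names of concepts such as “Negativity” or “West”) is hypothetical. The document does not say how “hasLength” is measured, so counting words is our assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Message:
    text: str
    has_time: datetime  # posting time ("hasTime")
    # Hypothetical: names of concepts the message has been annotated with.
    annotations: set[str] = field(default_factory=set)

    @property
    def has_length(self) -> int:
        """'hasLength', assumed here to be the number of whitespace-separated words."""
        return len(self.text.split())

    @property
    def has_number_of_ellipses(self) -> int:
        """'hasNumberOfEllipses': occurrences of '...' plus the single '\u2026' character."""
        return self.text.count("...") + self.text.count("\u2026")


@dataclass
class User:
    has_user_name: str
    has_followers_count: int = 0
    has_posted: list[Message] = field(default_factory=list)  # object property "HasPosted"

    def post(self, text: str, when: datetime) -> Message:
        """Create a message and link it to this user through has_posted."""
        msg = Message(text, when)
        self.has_posted.append(msg)
        return msg
```

Deriving the datatype properties from the text, rather than storing them, keeps them consistent with the message content if the text is ever corrected.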

PART 2 - Radicalization indicators

Our

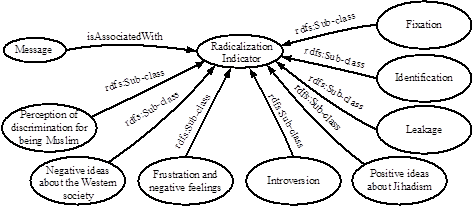

ontology defines a set of concepts to represent the radicalization indicators.

All indicators are derived of the concept “Radicalization-Indicator” which is

directly related to the “Message” concept through the property “isAssociated” (Figure-2).

Figure-2:

Considered radicalization indicators

We considered eight radicalization indicators, defined in our previous work [1, 2, 3] and in similar research work [4]. They can be categorized into two types: content-related and style-related. The former refers to the message content, regardless of the user. It includes six indicators: ‘perception of discrimination for being Muslim’, ‘expressing negative ideas about the western society’, ‘expressing positive ideas about Jihadism’, ‘fixation’, ‘identification’, and ‘leakage’. The latter refers to the writing style that characterizes each user. It includes two indicators: ‘the individual is frustrated’ and ‘the individual is introverted’. The indicators are defined in Table-1.

| Indicator | Description |
|---|---|
| The individual is frustrated | Expressing the fact that the individual does not know what to do with his or her life. Frustration refers to irritability, predominantly negative reactions, and anxiety. |
| Fixation | Fixation is defined as any behavior that indicates an increasing pathological preoccupation with a person or a cause [14]. It can be computed by simply counting the relative frequency of keywords relating to the entities of interest, such as persons (e.g., Hitler, Jews), organizations (e.g., al-Qaeda), causes (e.g., Palestine-Israel conflict), etc. |
| Identification | It represents the identification of an individual with a group (e.g., a terrorist group) or a cause. It can be expressed through the use of positive adjectives in connection with mentioning the group or cause. |
| The individual is introverted | Expressing the fact that the individual does not like being the center of attention (e.g., when the individual has only a few interventions in group conversations, uses short or moderate-length sentences, and has few interpersonal relationships). |
| Perception of discrimination for being Muslim | The individual expresses a sensation of being discriminated against because of his or her religion. |
| Expressing negative ideas about the western society | The individual expresses negative opinions about western countries and blames them for crises and wars in the Muslim world. |
| Expressing positive ideas about Jihadism | The individual expresses positive ideas and opinions about Jihadism and terrorist acts and organizations. |
| Leakage | Leakage can be defined as the communication of intent to do harm to a third party. Leakage usually signals research, planning and/or implementation of an attack [6]. |

Table-1: Radicalization Indicators
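The split of the eight indicators into content-related and style-related types can be captured as a simple lookup table. The identifiers below are hypothetical short names for the indicators of Table-1, not the ontology’s actual class names. In the ontology, all of them would be sub-concepts of “Radicalization-Indicator”.

```python
from enum import Enum


class IndicatorType(Enum):
    CONTENT = "content-related"  # depends on what the message says
    STYLE = "style-related"      # depends on how the user writes


# Hypothetical identifiers for the eight indicators of Table-1.
INDICATORS: dict[str, IndicatorType] = {
    "PerceptionOfDiscrimination": IndicatorType.CONTENT,
    "NegativeIdeasAboutWest": IndicatorType.CONTENT,
    "PositiveIdeasAboutJihadism": IndicatorType.CONTENT,
    "Fixation": IndicatorType.CONTENT,
    "Identification": IndicatorType.CONTENT,
    "Leakage": IndicatorType.CONTENT,
    "Frustration": IndicatorType.STYLE,
    "Introversion": IndicatorType.STYLE,
}


def indicators_of_type(kind: IndicatorType) -> list[str]:
    """Sorted names of the indicators belonging to one category."""
    return sorted(name for name, t in INDICATORS.items() if t is kind)
```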

Each indicator is associated with a set of keywords defined by the RiskTrack experts (i.e., linguistic experts, sociologists, and criminologists). In RiskTrack, indicator values can be computed in several ways:

- Based on keywords: The value of the indicator is computed by counting the frequency of its associated keywords and then normalizing the counts (this applies to most indicators).
- Based on the writing style: For example, the indicator ‘the individual is introverted’, which refers to the introversion personality trait, has no keywords and is computed from the length of sentences and the use of ellipses in messages.
- By applying semantic inference: Messages can be associated with some indicators when a set of conditions is met. This association and the satisfaction of the conditions can be modelled as inference rules. In this project, we use SWRL (Semantic Web Rule Language) inference rules.
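The first two computation modes can be sketched as follows. This is an illustration under assumptions, not RiskTrack’s code: the document does not say how keyword counts are normalized, so `keyword_score` divides by the total number of tokens in the user’s messages. `style_features` only computes the two inputs named above (sentence length and ellipses). How they are combined into an introversion value is not specified and is left out.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_score(messages: list[str], keywords: set[str]) -> float:
    """Relative frequency of indicator keywords over all tokens of a user's messages.

    Normalizing by the total token count is an assumption; per-message or
    cross-user normalizations would be equally plausible.
    """
    tokens = [t for m in messages for t in tokenize(m)]
    if not tokens:
        return 0.0
    hits = sum(1 for t in tokens if t in keywords)
    return hits / len(tokens)


def style_features(messages: list[str]) -> dict[str, float]:
    """Inputs for a keyword-less indicator such as introversion."""
    # Split each message into sentences on runs of ., ! or ? and drop empty pieces.
    sentences = [s for m in messages for s in re.split(r"[.!?]+", m) if s.strip()]
    words = sum(len(tokenize(s)) for s in sentences)
    ellipses = sum(m.count("...") for m in messages)
    return {
        "avg_sentence_length": words / len(sentences) if sentences else 0.0,
        "ellipses_per_message": ellipses / len(messages) if messages else 0.0,
    }
```

For example, `keyword_score(["I hate this", "hate hate"], {"hate"})` finds 3 keyword hits among 5 tokens and returns 0.6.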

Before going further, it is worth distinguishing between an indicator as a concept and its quantified value. For example, the message “Support and love for IslamicState from Kashmir” reflects a positive stance towards the “Islamic State”, one of the Jihadist terrorist organizations. It therefore relates directly to the indicator “Positive Ideas about Jihadism” and should be annotated with that concept. The value of an indicator, however, represents the likelihood that a given individual exhibits the indicator. In a perfect world, the value of the “Positive Ideas about Jihadism” indicator for the individual who posted this message would be “1”. Given the imperfection of existing data mining algorithms, however, that value would be less than “1”, and it should be computed using the whole set of messages posted by the individual, not only this one. In the following, we focus on when a message should be annotated with an indicator. Annotating a message with an indicator means that the message should be included when computing the indicator value.
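The distinction between annotating a message and valuing an indicator can be illustrated with one possible aggregation. The document does not give RiskTrack’s formula. The sketch below assumes a noisy-OR combination of hypothetical per-message confidences, i.e. the confidence that a given message exhibits the indicator. Noisy-OR fits the reasoning above: one fully confident annotation yields 1 (the “perfect world” case), while imperfect annotations yield values below 1 that grow as more of the user’s messages are taken into account.

```python
from math import prod


def indicator_value(confidences: list[float]) -> float:
    """Noisy-OR of per-message confidences: 1 - prod(1 - p_i).

    confidences[i] is a hypothetical confidence that message i exhibits the
    indicator; messages not annotated with the indicator contribute 0.
    """
    for p in confidences:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"confidence out of range: {p}")
    return 1.0 - prod(1.0 - p for p in confidences)
```

For instance, a single annotation with confidence 0.8 gives 0.8, and adding a second annotated message at 0.6 raises the value to about 0.92.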

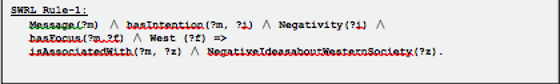

Inferring indicators by semantic inference

- Inferring the indicator “Negative Ideas about the western society”: To annotate a message with the “Negative Ideas about the western society” indicator, two conditions must both be satisfied. The first condition is that the message content expresses a negative intention, demonstrated by negative actions or emotions. The second condition is the presence of keywords related to western people or society (e.g., West, US, USA, Europe). “I detest western society” is an example of a message that satisfies both conditions. In other words, the message should already be associated (i.e., annotated) with the concepts “Negativity” and “West”. To ensure that both conditions are satisfied (for annotating a message with the concept “Negative idea about the West”), we model them using an inference rule written in the Semantic Web Rule Language (SWRL) as follows.

Rule-1 states that if a message (represented by the variable “?m”) is associated with a concept representing negativity (by referring to one of the negativity concepts or their associated keywords in its text content) and mentions one of the concepts representing the West, then it should be considered as expressing a negative idea about the West, i.e., associated with the indicator “Negative Ideas about the western society”.
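The logic of Rule-1 can be mimicked outside an ontology reasoner to make it concrete. This is a hypothetical Python paraphrase, not the SWRL rule itself: the concept names, the `SUBCLASS_OF` fragment, and `apply_rule_1` are illustrative assumptions. In SWRL, the check that a concept is a sub-concept of “Negativity” or “West” is left to the OWL reasoner. Here, `is_a` does it by walking parent links.

```python
# Hypothetical fragment of the hierarchy, as child -> parent links.
SUBCLASS_OF = {
    "NegativeFeelings": "Negativity",
    "NegativeActions": "Negativity",
    "USA": "West",
    "Europe": "West",
}


def is_a(concept: str, target: str) -> bool:
    """True if `concept` is `target` or a (transitive) sub-concept of it."""
    current = concept
    while current is not None:
        if current == target:
            return True
        current = SUBCLASS_OF.get(current)
    return False


def apply_rule_1(annotations: set[str]) -> set[str]:
    """Add the indicator when the message carries some Negativity concept
    AND some West concept (a paraphrase of Rule-1, not its SWRL text)."""
    has_negativity = any(is_a(c, "Negativity") for c in annotations)
    mentions_west = any(is_a(c, "West") for c in annotations)
    result = set(annotations)
    if has_negativity and mentions_west:
        result.add("NegativeIdeasAboutWest")
    return result
```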

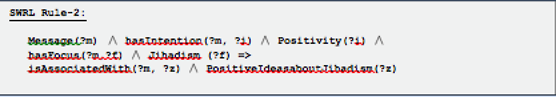

- Inferring the indicator “Positive Ideas About Jihadism”: To associate (i.e., annotate) a message with the “Positive Ideas About Jihadism” indicator, the message content should contain positive verbs, adjectives, or adverbs that indicate the user’s support for an idea or an entity. The message content must also refer to keywords or concepts related to the “Jihadism” concept. “Jihadism” relates to several concepts in the ontology, such as “Terrorist Organization”, “Weapon”, “Tactic”, and “Terrorist Personality”. To ensure that these two conditions are satisfied (for annotating a message with the concept “Positive Ideas about Jihadism”), we model them using an inference rule written in SWRL as follows:

Rule-2 states that if a message (represented by the variable “?m”) is associated with a concept representing positivity (by referring to one of the positivity concepts or their associated keywords in its text content) and mentions one of the concepts representing or associated with the concept “Jihadism”, then it should be considered as expressing a positive idea about Jihadism, i.e., associated with the indicator “Positive Ideas about Jihadism”. For example, the message “Support and love for IslamicState from Kashmir” expresses a positive stance towards a terrorist organization and would be annotated with “Positive Ideas About Jihadism”, since the “Islamic State” is a concept (or, more accurately, an instance of a concept) that relates to Jihadism.
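Because “Jihadism” relates to several concepts, and an instance such as the Islamic State reaches it only through a chain of links, the second condition of Rule-2 is a graph-reachability question rather than a single parent lookup. The sketch below assumes a hypothetical `LINKS` table that treats instance-of, subclass, and relates-to links uniformly as directed edges. It then uses breadth-first search. It is an illustration, not the RiskTrack rule.

```python
from collections import deque

# Hypothetical links (instanceOf / subClassOf / relatesTo) treated uniformly
# as directed edges; a concept may have several outgoing links.
LINKS = {
    "IslamicState": ["ReligiousTerroristOrganization"],
    "ReligiousTerroristOrganization": ["TerroristOrganization"],
    "TerroristOrganization": ["Jihadism"],
    "TerroristPersonality": ["Jihadism"],
    "Weapon": ["Jihadism"],
    "Tactic": ["Jihadism"],
    "PositiveFeelings": ["Positivity"],
}


def reaches(start: str, goal: str) -> bool:
    """Breadth-first search: is `goal` reachable from `start` via LINKS?"""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in LINKS.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def apply_rule_2(annotations: set[str]) -> set[str]:
    """Paraphrase of Rule-2: some positivity concept AND some concept related to Jihadism."""
    positive = any(reaches(c, "Positivity") for c in annotations)
    jihadist = any(reaches(c, "Jihadism") for c in annotations)
    result = set(annotations)
    if positive and jihadist:
        result.add("PositiveIdeasAboutJihadism")
    return result
```

With the message from the example annotated as `{"PositiveFeelings", "IslamicState"}`, both searches succeed and the indicator is added.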

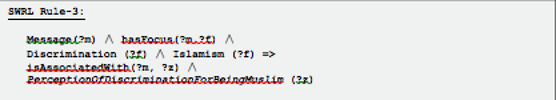

- Inferring the indicator “Perception of discrimination for being Muslim”: To annotate a message with the “Perception of discrimination for being Muslim” concept, two conditions must be satisfied. The first is that the message includes Islamic keywords (keywords related to the “Islamism” concept); the second is that the message expresses feelings of discrimination (by including keywords related to the “Discrimination” concept). To ensure that both conditions are satisfied, we model them using an inference rule written in SWRL as follows:

For example, the message “Poor Muslims are oppressed by US governments” would be annotated with this high-level concept.
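The rule for ‘perception of discrimination for being Muslim’ can be traced directly from raw text. In the following sketch, keyword lists first produce concept annotations, and a conjunction over the two concepts then produces the indicator. The keyword sets are hypothetical stand-ins, since the expert-defined RiskTrack lists are not given here. The sketch is an illustration rather than the project’s annotator.

```python
import re

# Hypothetical keyword lists; the expert-defined RiskTrack lists are not reproduced here.
KEYWORDS = {
    "Islamism": {"muslim", "muslims", "islam", "islamic", "ummah"},
    "Discrimination": {"oppressed", "oppression", "discriminated", "persecuted", "racism"},
}


def annotate(text: str) -> set[str]:
    """Annotate a message with concepts, then apply the discrimination rule."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    concepts = {name for name, kws in KEYWORDS.items() if tokens & kws}
    # Both conditions must hold: Islamism AND Discrimination.
    if {"Islamism", "Discrimination"} <= concepts:
        concepts.add("PerceptionOfDiscrimination")
    return concepts
```

Applied to “Poor Muslims are oppressed by US governments”, the tokens `muslims` and `oppressed` trigger both concepts, so the indicator is added.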

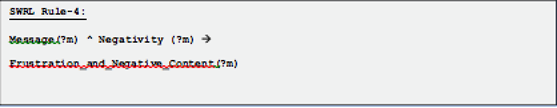

- Inferring the indicator “Frustration and Negative Feeling”: A message is annotated with this concept when it includes negative content expressed through negative feelings (e.g., frustration, hate, guilt, and fault) or negative actions (e.g., kill, explode). The following SWRL rule models these two conditions.
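Unlike the previous rules, this condition is a disjunction (negative feelings or negative actions). SWRL rule bodies are conjunctions of atoms, so an either/or condition is typically written as two rules that share the same head. The sketch below shows that pattern with a tiny forward-chaining loop over hypothetical concept names. It is not the RiskTrack rule set.

```python
# Each rule is (body, head): if every concept in body is present, add head.
# Two rules with the same head encode "NegativeFeelings OR NegativeActions".
RULES = [
    ({"NegativeFeelings"}, "FrustrationAndNegativeFeeling"),
    ({"NegativeActions"}, "FrustrationAndNegativeFeeling"),
]


def forward_chain(annotations: set[str], rules: list[tuple[set[str], str]]) -> set[str]:
    """Apply rules repeatedly until no new annotation can be derived."""
    facts = set(annotations)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if body <= facts and head not in facts:
                facts.add(head)
                changed = True
    return facts
```

Iterating to a fixed point also lets rule heads feed other rules, which matters once more inference rules are added.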

Note that additional semantic inference rules will be defined and included to cover the complete list of radicalization indicators.

PART 3 - Radicalization and terrorism related concepts

Our ontology reuses and extends concept definitions from several existing terrorism ontologies. Specifically, we reused and extended the following concepts:

- The “Terrorist Organization” concept: We reused this concept from the Adversary Intent ontology [5] and refined it with several sub-concepts and instances. For example, “Islamic State of Iraq and Syria”, “Hezbollah”, “Al-Nusra Front”, “Al-Aqsa Foundation”, and “Qaeda”, to name just a few, are all instances of the “Religious Terrorist Organization” concept. We have also defined the concept “Terrorist Personality” to represent high-profile individuals who have been associated with a terrorist organization (e.g., Osama Bin Laden, Abu Bakr Al Baghdadi). We have also associated these concepts with relevant keywords and abbreviations.

- The “Weapon” concept: We reused this concept, along with its subclasses, from the Terrorism ontology in [7]. We have also associated these concepts with relevant keywords and abbreviations.

This part also defines important concepts that can be used to characterize the content of messages, such as “Discrimination”, “Tactics” (the tactics used for a terrorist attack), “Negative Feelings”, “Negative Actions”, “Positive Feelings”, etc.
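Associating instances with keywords and abbreviations amounts to an alias table. Such a table maps each surface form to an instance and its concept, including multi-word names and spellings such as “IslamicState”. The sketch below is a hypothetical illustration: the `ALIASES` entries and `find_entities` are assumptions, not the ontology’s actual keyword lists. Word-boundary regular expressions keep short abbreviations from matching inside longer words.

```python
import re

# Hypothetical alias table: lower-cased surface form -> (instance, concept).
ALIASES = {
    "isis": ("Islamic State of Iraq and Syria", "ReligiousTerroristOrganization"),
    "isil": ("Islamic State of Iraq and Syria", "ReligiousTerroristOrganization"),
    "islamic state": ("Islamic State of Iraq and Syria", "ReligiousTerroristOrganization"),
    "islamicstate": ("Islamic State of Iraq and Syria", "ReligiousTerroristOrganization"),
    "hezbollah": ("Hezbollah", "ReligiousTerroristOrganization"),
    "al-nusra front": ("Al-Nusra Front", "ReligiousTerroristOrganization"),
    "baghdadi": ("Abu Bakr Al Baghdadi", "TerroristPersonality"),
}


def find_entities(text: str) -> set[tuple[str, str]]:
    """Return the (instance, concept) pairs whose aliases occur as whole words."""
    lowered = text.lower()
    found = set()
    for alias, entity in ALIASES.items():
        # re.escape protects '-' and spaces; \b prevents partial-word matches.
        if re.search(r"\b" + re.escape(alias) + r"\b", lowered):
            found.add(entity)
    return found
```

Several aliases resolve to the same instance, so the message “Support and love for IslamicState from Kashmir” yields a single entity regardless of which spelling was used.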

REFERENCES

[1] R. Lara-Cabrera, A. Gonzalez-Pardo, and D. Camacho, “Statistical Analysis of Risk Assessment Factors and Metrics to Evaluate Radicalisation in Twitter,” Future Generation Computer Systems (FGCS), published online November 4, 2017. doi:10.1016/j.future.2017.10.046

[2] R. Lara-Cabrera, A. Gonzalez-Pardo, K. Benouaret, N. Faci, D. Benslimane, and D. Camacho, “Measuring the Radicalisation Risk in Social Networks,” IEEE Access, 2017.

[3] A. Masmoudi, M. Barhamgi, N. Faci, D. Benslimane, and D. Camacho, “An Ontology-based Approach for Mining Radicalization Indicators from Online Messages,” 32nd IEEE International Conference on Advanced Information Networking and Applications, Track T10: Internet of Things and Social Networking, Krakow, Poland, May 16-18, 2018.

[4] F. Johansson, L. Kaati, and M. Sahlgren, “Detecting linguistic markers of violent extremism in online environments,” in Combating Violent Extremism and Radicalization in the Digital Era, pp. 374-390, 2016.

[5] P. Mullen, D. James, J. R. Meloy, M. Pathé, F. Farnham, L. Preston, B. Darnley, and J. Berman, “The fixated and the pursuit of public figures,” Journal of Forensic Psychiatry and Psychology, vol. 20, pp. 33-47, 2009.

[6] K. Cohen, F. Johansson, L. Kaati, and Mork, “Detecting linguistic markers for radical violence in social media,” Terrorism and Political Violence, vol. 26, no. 1, 2014.

[7] F. Baader, D. Calvanese, D. L. McGuinness, D. Nardi, and P. F. Patel-Schneider, The Description Logic Handbook: Theory, Implementation, and Applications. Cambridge University Press, 2003.